Measuring the implicit biases we may not even be aware we have

When most people think of bias, they imagine an intentional thought or action – for example, a conscious belief that women are worse than men at math or a deliberate decision to pull someone over because of his or her race. Gender and race biases in the United States have historically been overt, intentional and highly visible. But changes to the legal system and to the norms guiding acceptable behavior in the U.S. have led to clear reductions in such explicit bias.

Unfortunately, we still see disparities in health, law enforcement, education and career outcomes depending on group membership. And many large-scale disparities we see in society also show up in small-scale studies of behavior. So, how are these inequalities sustained in a country that prides itself on egalitarianism?

Of course, overt sexists and racists still exist, and explicit biases are important. However, explicit bias is not the whole of how many social and organizational scientists like us currently understand prejudice (negative attitudes toward members of a social group) and stereotyping (beliefs about the characteristics of a social group). Our field is working to understand and measure implicit bias, which stems from attitudes or stereotypes that occur largely outside of conscious awareness and control.

How to reveal biases we may not know we have

In many cases, people don’t know they have these implicit biases. Much like we cannot introspect on how our stomachs or lungs are working, we cannot simply “look inside” our own minds and find our implicit biases. Thus, we can only understand implicit bias through the use of psychological measures that get around the problems of self-report.

There are a number of measures of implicit bias; the most widely used is called the Implicit Association Test (IAT; versions are available to try on the Project Implicit website). Researchers have published thousands of peer-reviewed journal articles based on the IAT since its creation in 1998.

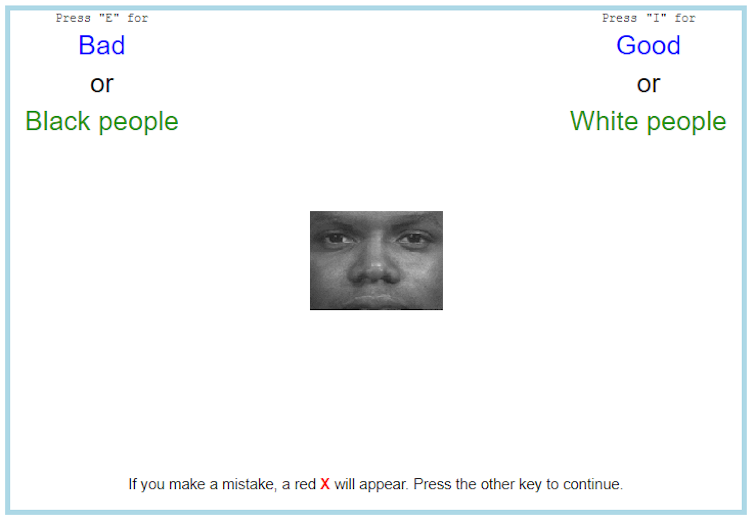

The IAT measures the strength of associations between social groups (for instance, black and white people) and evaluations (such as good and bad). Just as you likely have a strong mental link between peanut butter and jelly, or doctor and nurse, our minds make links between social groups (like “women”) and evaluations (“positive”) or stereotypes (“nurturing”).

When taking an Implicit Association Test, one rapidly sorts images of black and white people and positive and negative words. The main idea is that making a response is easier when items that are more closely related in memory share the same response key. In one part of the test, black faces and negative words share the same response key, while white faces and positive words share a different response key. In another part of the test, white faces and negative words share the same response key, and black faces and positive words share a different response key. The extent to which one is able to do the white + good version of the test more easily than the black + good version reflects an implicit pro-white bias.
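To make the comparison between the two versions of the test concrete, here is a minimal sketch of how such a difference could be turned into a single number. It is an illustration only: the article does not describe the IAT's actual scoring procedure, so the function name `simplified_iat_score`, the choice to divide by the standard deviation of all trials, and the response times below are all assumptions made for this example.

```python
from statistics import mean, stdev


def simplified_iat_score(white_good_ms, black_good_ms):
    """Standardized difference in response time between the two versions.

    Both arguments are lists of response times in milliseconds (hypothetical
    data). A positive result means responses were faster, on average, in the
    white + good version than in the black + good version.
    """
    # Spread of all response times across both versions (assumed scaling).
    all_trials_sd = stdev(list(white_good_ms) + list(black_good_ms))
    return (mean(black_good_ms) - mean(white_good_ms)) / all_trials_sd


# Made-up response times for one imaginary participant.
white_good_block = [610, 650, 590, 700, 640, 620]
black_good_block = [720, 760, 690, 810, 750, 730]

score = simplified_iat_score(white_good_block, black_good_block)
print(f"Simplified score: {score:.2f}")
```

In this toy version, a score near zero would mean the two versions were about equally easy, and a negative score would mean the black + good version was the easier one.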

Pro-white implicit biases are pervasive. Data from millions of visitors to the Project Implicit website reveal that, while about 70 percent of white participants report having no preference between black and white people, nearly the same proportion show some degree of pro-white preference on the IAT. Other tests reveal biases in favor of straight people over gay people, abled people over disabled people and thin people over fat people, and show that people associate men with science more readily than they associate women with science.

Do IAT scores relate to real-world behavior?

A central question about implicit bias and the IAT is how it relates to discriminatory behavior. Arguably, what people actually do is most important, particularly when trying to understand how individual biases might lead to societal disparities.

And, in fact, researchers have demonstrated that people’s scores on the IAT predict how they behave. For example, one study showed that physicians with higher levels of implicit race bias were less likely to recommend appropriate treatment for a black patient than a white patient with coronary artery disease. A meta-analysis of more than 150 studies also supports the idea that there is a reliable relationship between implicit bias, measured by the IAT, and real-world behavior.

This is not to say, however, that there’s a one-to-one correspondence between implicit bias and behavior; someone with strong pro-white implicit bias might sometimes hire a black employee, and someone with little or no implicit pro-white bias might sometimes discriminate against a black person in favor of a less qualified white person.

While the link between race bias and behavior is robust, it is also fairly small. But small does not mean unimportant. Small effects can have cumulative consequences at both the societal level (across lots of different people making decisions) and at the individual level (across lots of different decisions that one person makes). And some implicit biases are more related to behavior than others; for example, implicit political preferences have a very strong relationship with voting behavior.
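The arithmetic behind "small does not mean unimportant" can be sketched in a few lines. This is a hedged illustration, not an analysis from the research described here: the function `expected_outcome_gap` and the rates used (a two-percentage-point difference per decision) are hypothetical values chosen only to show how a small per-decision gap adds up.

```python
def expected_outcome_gap(n_decisions, favorable_rate_a, favorable_rate_b):
    """Expected difference in favorable outcomes between two groups.

    Assumes each group is the subject of `n_decisions` independent decisions,
    each with a fixed chance of a favorable outcome (hypothetical rates).
    """
    return n_decisions * (favorable_rate_a - favorable_rate_b)


# Hypothetical: group A gets a favorable outcome 50% of the time, group B 48%.
gap_single = expected_outcome_gap(1, 0.50, 0.48)
gap_many = expected_outcome_gap(10_000, 0.50, 0.48)

print(f"Expected gap after 1 decision: {gap_single:.2f}")
print(f"Expected gap after 10,000 decisions: {gap_many:.0f}")
```

Under these assumed numbers, a difference that is barely visible in any one decision corresponds to roughly 200 fewer favorable outcomes for one group across 10,000 decisions.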

Certainly more work is needed to understand the precise conditions under which the IAT will predict behavior, and how strongly, and for what attitudes. But in the aggregate, across people and settings, there is a substantial body of evidence indicating that the IAT is related to behavior.

With or without a test, implicit bias exists

The idea that people have associations in their minds, particularly in socially sensitive domains, that contradict their self-reported beliefs is well-established within the social sciences. But there remain important open questions about how best to identify and quantify such implicit biases and when and how implicit biases in people’s minds translate into meaningful, real-world behavior.

The IAT has withstood constant criticism since its creation in 1998. These critiques have led to improvements of the measure and the way it is scored, as well as the tempering of early claims and the creation of new measurement procedures. That’s the way a healthy science progresses. As a result of criticism, the IAT is one of the best-understood psychological measures in use by social scientists.

Even if it were to turn out that our current measures of implicit bias are problematic, that would have little bearing on whether or not implicit bias exists. Mental links between social groups and evaluations and attributes are real. Bias exists. And while learning about implicit bias can be an important step in initiating behavior change for some people, there is no published evidence that awareness alone is an antidote to the influence of implicit bias. To see a reduction in bias-based disparity, it is essential that we develop and implement empirically tested interventions – specific tools we can use to produce egalitarian behavior.

Kate Ratliff, Assistant Professor of Psychology, University of Florida, and Colin Smith, Assistant Professor of Psychology, University of Florida

This article is republished from The Conversation under a Creative Commons license. Read the original article.
